It is an understatement and a cliché to say that 2020 was a unique experience in our lives. Yet I was fortunate to have access to some great books, newsletters, and podcasts that taught me a lot during this time. In this post, I list some key learnings and a few of the resources that taught me the most in 2020.

Key Lessons I Learnt

On Health & Diet

- Insulin resistance is the underlying cause of most heart-related health ailments (especially for South Asians like me).

- A carbohydrate-heavy diet leads to constant insulin spikes. Over time, this leads to one of two problems: insulin resistance, where muscle and other tissues stop responding to insulin and stop absorbing glucose from carbs, or low insulin, where the pancreas stops producing enough insulin. Both cause excess glucose to stay in the bloodstream or go to the liver (where it is converted to fat), leading to multiple chronic illnesses. – South Asian Health Solutions by Ronesh Sinha.

- Pay less attention to overall cholesterol and more attention to triglycerides. Keep your triglyceride-to-HDL ratio below 3.0.

- Do bodyweight exercises at least once or twice a week. Incorporate interval/HIIT training twice a week.

- High stress levels, irregular sleep patterns, and low vitamin D can accelerate insulin resistance.

- Shift carb intake to post-workout periods so that muscles use the carbs for energy instead of the carbs going to fat-cell storage. Working out before breakfast is effective for this reason.

- Avoid processed foods, sugary drinks, and other man-made foods (evolution did not prepare our bodies to consume them). Moderate your consumption of starchy foods, grains, and legumes. Prefer low-glycemic carbs over high-glycemic ones.
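
The triglyceride-to-HDL target above is simple arithmetic, so here is a minimal sketch of the check. It assumes both values come from the same lab report in the same unit (mg/dL is assumed here), and the function names and sample numbers are hypothetical, for illustration only.

```python
def triglyceride_hdl_ratio(triglycerides_mg_dl: float, hdl_mg_dl: float) -> float:
    """Return triglycerides divided by HDL (both assumed to be in mg/dL)."""
    if hdl_mg_dl <= 0:
        raise ValueError("HDL must be a positive number")
    return triglycerides_mg_dl / hdl_mg_dl


def within_target(triglycerides_mg_dl: float, hdl_mg_dl: float,
                  threshold: float = 3.0) -> bool:
    """True when the ratio is below the threshold (3.0, as in the bullet above)."""
    return triglyceride_hdl_ratio(triglycerides_mg_dl, hdl_mg_dl) < threshold


# Hypothetical lab values, not real data.
print(within_target(120, 50))  # ratio 2.4, below 3.0
print(within_target(180, 40))  # ratio 4.5, above 3.0
```

This is only a calculator for the ratio; it says nothing about what the right target is for any particular person.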

On Money, Finances & Investing

- Live below your means, not within your means. In other words, limit lifestyle creep as your income grows. The hardest financial skill is getting the goalpost to stop moving. Raising your lifestyle with every increase in income is a never-ending hedonic treadmill.

- Saving money can give you more control over how you spend your time in the future. Time is the most valuable resource. Money is just a means to buy time.

- Good investing isn’t necessarily about earning the highest returns through one-off hits. It is about compounding above-average returns over the long term. Be patient!

- Sell down to a point where you can sleep better. Health above wealth.

- Equanimity & Humility: Let go of the fear of missing out. Don’t act in haste. Have the humility to acknowledge blind spots and tail-risk events, and don’t let greed wipe out your wealth.
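
To make the compounding point concrete, here is a small sketch comparing a steady above-average return with a single big year followed by average returns. The rates, the 30-year horizon, and the starting amount are made-up assumptions for illustration, not figures from any of the resources above.

```python
def future_value(principal: float, annual_rate: float, years: int) -> float:
    """Compound `principal` at `annual_rate` once a year for `years` years."""
    return principal * (1 + annual_rate) ** years


start = 10_000  # hypothetical starting amount

# Steady, above-average return held for the whole period (assumed 10% a year).
steady = future_value(start, 0.10, 30)

# A one-off hit (assumed +50% in year one), then an assumed 6% a year for 29 years.
one_off = future_value(future_value(start, 0.50, 1), 0.06, 29)

print(f"steady:  {steady:,.0f}")
print(f"one-off: {one_off:,.0f}")
print("steady compounding ends ahead:", steady > one_off)
```

With these assumed numbers the patient path ends up well ahead, although different rates or a shorter horizon can change the outcome.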

On Parenting

- Kids need autonomy, a sense of competence, and care/relatedness.

- Kids should be encouraged to listen to their own intuition, to be spontaneous, and to be creative in their thoughts and actions. This gives them autonomy and a willingness to try without fear of failure.

- Discipline over punishment. Discipline means teaching them the consequences of their actions with love. This also tells them you care. Threats and punishment breed a sense of authority and can hurt their self-confidence.

- Help kids reason through failure (even for small tasks) and appreciate the effort. This develops a learning mentality and the confidence that they can learn anything.

On Leadership

- Caring personally and challenging directly build trust and open lines of communication. Be easy on the person. Be tough on the problem.

- Good leadership is the ability to balance directing and supporting with coaching and delegating.

- Good communication is about explaining the why, how, and what behind a vision. This inspires action and fosters innovation.

On Decision Making

- Types of Decisions: Break decisions into one-way doors (irreversible ones) and two-way doors (reversible ones). Be slow and consensus-driven with one-way doors, and quick and individual-driven with two-way doors. Most firms treat every decision as a one-way door and end up being slow. – Jeff Bezos.

- Avoid Trap of Perfection: A good plan, violently executed now, is better than a perfect plan later.

- Escalate fundamental disagreements early.

- Breaking Decision Paralysis: When two options are that close, it’s likely you won’t regret either one in the future.

- Tools: Use preferences, probabilities, and payoffs rather than a pros/cons list. – How to Decide by Annie Duke
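
One simple way to combine probabilities and payoffs is an expected-value comparison, sketched below. This is my own simplified illustration, not necessarily the exact procedure from the book: the payoff scores stand in for preferences on an arbitrary scale, and the options and numbers are hypothetical.

```python
import math

# Each option is a list of (probability, payoff) pairs, one per possible outcome.
# Payoffs encode preferences on an arbitrary scale (higher means better).
Outcome = tuple[float, float]


def expected_value(outcomes: list[Outcome]) -> float:
    """Probability-weighted average payoff of one option."""
    total_probability = sum(p for p, _ in outcomes)
    if not math.isclose(total_probability, 1.0, abs_tol=1e-9):
        raise ValueError("probabilities for an option must sum to 1")
    return sum(p * payoff for p, payoff in outcomes)


# Hypothetical decision: two options with guessed probabilities and payoffs.
options = {
    "take the new role": [(0.6, 8.0), (0.4, -2.0)],
    "stay in current role": [(0.9, 3.0), (0.1, 0.0)],
}

for name, outcomes in options.items():
    print(f"{name}: {expected_value(outcomes):.2f}")

best = max(options, key=lambda name: expected_value(options[name]))
print("highest expected value:", best)
```

Unlike a pros/cons list, this forces you to state how likely each outcome is and how much you care about it. The result is only as good as those guesses.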

On Markets & Business Strategy

- Future monopolies never look like the current ones. The best way to compete is to pick a narrow market orthogonal to the existing monopoly and create immense value for users/customers. Google did not start out as a Yahoo clone; Facebook did not start out as a New York Times competitor. – The Greatest Game by Jeff Booth

- Competing in a new market is advantageous to disrupters because it is almost always expensive and self-destructive for existing companies to compete there. – Intel’s Disruption by Steven Sinofsky.

- In enterprise software, up-selling to an existing customer is easier than acquiring a new one. A collection of good-enough tools that are integrated together is a much more valuable proposition for an IT department than tools that work great individually. This dynamic helps Microsoft Teams compete well with Slack despite Slack’s superior technology. – Salesforce Acquires Slack by Stratechery

- Companies/industries that have a long time gap between generating supply and creating demand are more susceptible to natural disasters. Airlines need to front-load the supply of planes and recover the cost from passengers later; hotels have to pay for real estate and inventory before recovering the cost from travelers. These are the industries that suffered most during the pandemic.

Books

These are the books that I learnt most from in 2020.

This book is simple, short, and effective at explaining why and how to optimize for the long term. It introduces three main ideas as tools to help us play a long-term game: thinking through second-order effects, learning how best to motivate ourselves, and becoming experts at delaying gratification.

This is the best book I have read on health and diet thus far. It taught me a lot about the impact of carbs versus fat and how insulin plays a huge role in multiple health problems. The book explains how reducing carb intake, combined with regular exercise, can address the most common chronic issues such as diabetes and cardiac arrest, and even reduce the risk of cancer.

Also, it is a great companion to the Thomas DeLauer and Dr. Eric Berg DC YouTube series on intermittent fasting.

This book teaches how to balance being nice with giving effective feedback. The author explains the concept of caring personally and challenging directly to build trust and enable open communication. It has some great tools and concepts that I personally found useful in my job as an Engineering Manager.

Decision making is a complex subject. This book simplifies it into a few simple tools, such as avoiding resulting, avoiding hindsight bias, and using preferences, probabilities, and payoffs instead of pros/cons lists. I personally found these tools easy to use and practical. I have even used them in some of the big decisions I had to make in 2020.

This book, unlike most other investment books, teaches how we should think about money, expenses, lifestyle choices, retirement, the value of time, and compounding. An excerpt from the book: “The premise of this book is that doing well with money has a little to do with how smart you are and a lot to do with how you behave. And behavior is hard to teach, even to really smart people.”

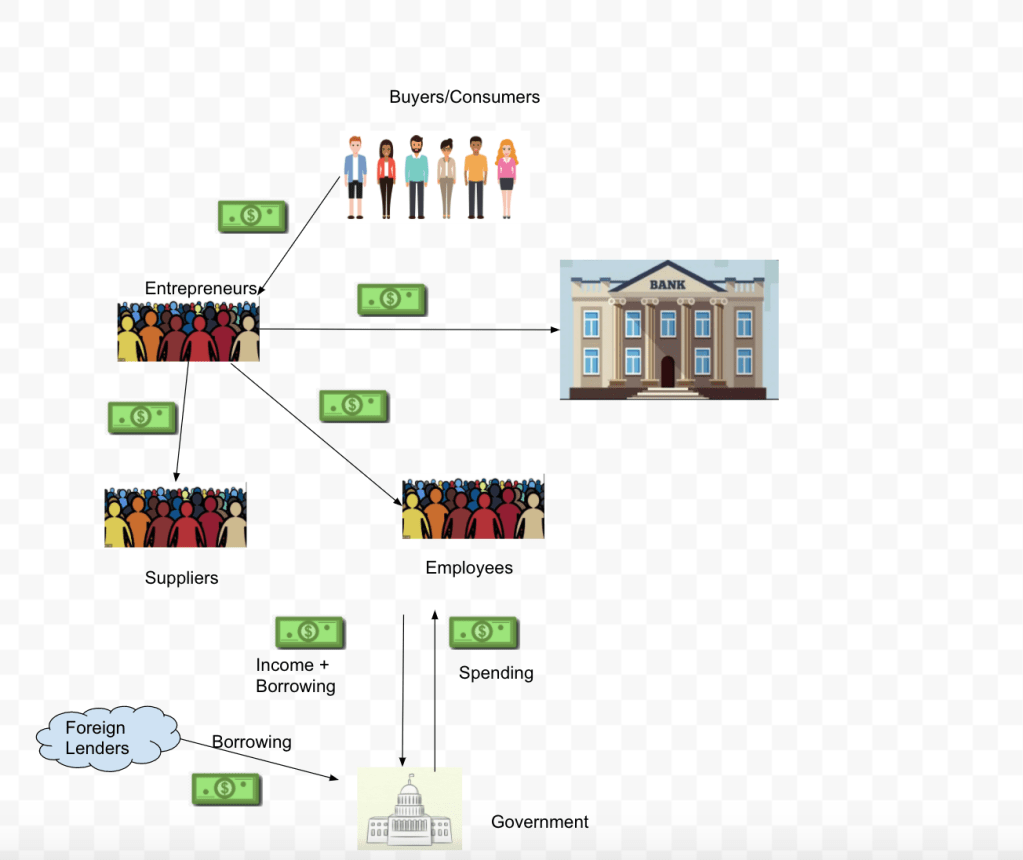

Technology is by nature deflationary. Take the iPhone as an example: for $600, it bundles a computer, camera, phone, screen (TV), and more. But the monetary policies of all the central banks are inflationary. In this book, the author explains the impact of these policies on society (a two-class system of haves and have-nots), politics, and the economy, and the danger they pose.

Podcasts

These are the podcasts that influenced my thinking most in 2020.

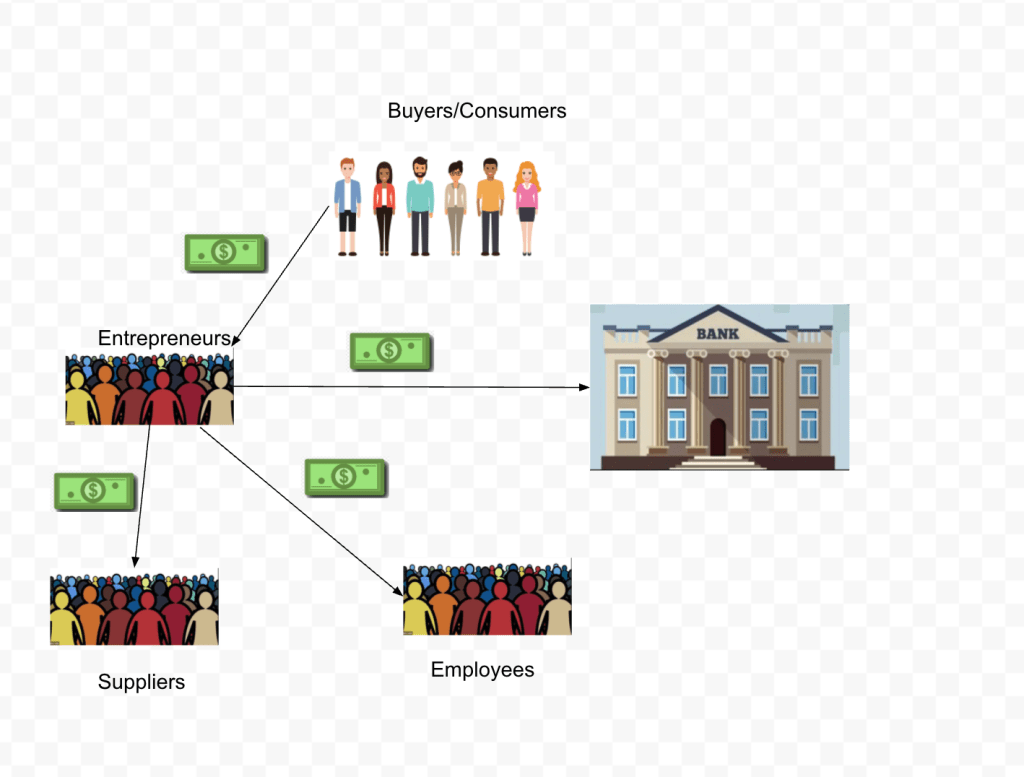

This is an excellent resource on business building and investing. It has great conversations with founders and investors walking through their journeys. I learned a lot from its episodes on market dynamics, disruption, business moats, incentives in multi-sided marketplaces, etc.

Great podcast on technology and its impact on society, regulation and frameworks for regulating tech.

I have been a long-time listener of this show. This podcast is the best one out there when it comes to focusing on fundamentals, understanding the market, and learning about investing. Especially in 2020, when market movements were crazy, this podcast was irreplaceable for me.

Blogs/Newsletters

Here are some newsletters that influenced my thinking most in 2020.

I have been a long-time reader of this blog. This is one of the best resources for understanding business, strategy, technology, and the internet’s impact on society. Apart from that, Ben also has great thoughts on how to regulate technology.

This newsletter covers two of my interests: finance and technology. Both the business landscape and market dynamics hit a huge inflection point in 2020 as distributed work and staying at home became prominent. Byrne does an excellent job of putting these changes into a larger perspective. It is a great companion read to Stratechery.

2020 was the year of Covid. There has been a lot of information, but I found Zeynep’s writing to be the single most authoritative source for understanding Covid, its developments, the public policy around it, vaccines, etc.

Thoughts from a legendary investor. A big-picture understanding of market dynamics and behaviors during this pandemic.